Observing default behavior: JSON is indented, and we pay for it

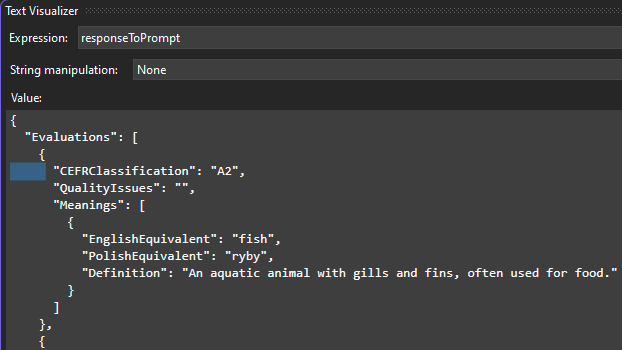

While working with the ChatGPT API, I noticed that the JSON responses I normally receive contain many whitespace characters used for indentation. Here’s a fragment of a response as an illustration:

Output tokens still cost quite a lot in 2024 (assuming we process lots of data), so I wanted to test the hypothesis that we can reduce the cost by cutting out the unnecessary whitespace.
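To get a feel for how much of an indented JSON document is pure whitespace, here is a minimal sketch that serializes the same object twice with System.Text.Json, once indented and once compact, and counts the characters. The sample payload is hypothetical, not the author's actual response. Characters are only a rough proxy: the API bills tokens, and how whitespace maps to tokens depends on the model's tokenizer.

```csharp
using System;
using System.Linq;
using System.Text.Json;

// Hypothetical payload shaped like a typical structured answer (not the author's data).
var sample = new
{
    items = new[]
    {
        new { name = "apple", category = "fruit", calories = 52 },
        new { name = "carrot", category = "vegetable", calories = 41 },
    }
};

// Indented output, similar to what the model returned by default.
string indented = JsonSerializer.Serialize(sample, new JsonSerializerOptions { WriteIndented = true });

// Compact output: WriteIndented defaults to false.
string compact = JsonSerializer.Serialize(sample);

// Counts every whitespace character, including any inside string values (none in this sample).
int WhitespaceCount(string s) => s.Count(char.IsWhiteSpace);

Console.WriteLine(indented);
Console.WriteLine(compact);
Console.WriteLine($"Indented: {indented.Length} chars ({WhitespaceCount(indented)} whitespace)");
Console.WriteLine($"Compact:  {compact.Length} chars ({WhitespaceCount(compact)} whitespace)");
```

Both strings describe the same data; the difference is entirely whitespace, and it grows with every level of nesting.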

Experiment asking ChatGPT (gpt-3.5-turbo) to return non-indented JSON

Default JSON response

My baseline is a prompt sent using the official OpenAI NuGet package. I also set the following option to request a response in JSON format:

```csharp
using OpenAI.Chat;

// Ask the API to return a JSON object (JSON mode).
// Newer releases of the package expose this as ChatResponseFormat.CreateJsonObjectFormat().
ChatCompletionOptions options = new ChatCompletionOptions()
{
    ResponseFormat = ChatResponseFormat.JsonObject
};
```

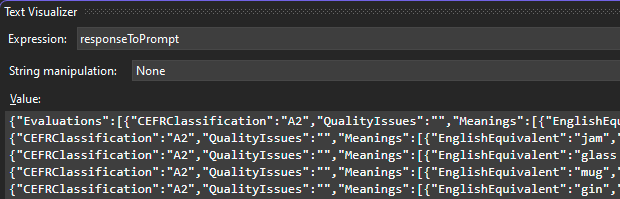

Asking explicitly for no indentation

I added the following sentence to my prompt: “Please don't use indentation in a response.”

This indeed changed the response to contain no whitespace:

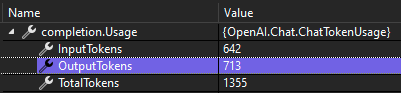

But did the token counter change? Let’s take a look:

As you can see:

- InputTokens increased slightly (I had to add one sentence to the prompt), but the cost here is marginal

- OutputTokens now cost me only 57% of the original price!

Paying $57 instead of $100 for output tokens doesn’t sound bad, eh? And the more deeply nested the JSON structure, the higher the savings can be, since every nesting level adds indentation to each line inside it.
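Note that the 57% figure applies to output tokens only; the total bill also includes input tokens, which the trick slightly increases. A minimal sketch of the arithmetic, assuming gpt-3.5-turbo-0125 list prices of $0.50 (input) and $1.50 (output) per million tokens and hypothetical token counts chosen so that output drops to 57% of the baseline:

```csharp
using System;

// Assumed list prices in USD per 1M tokens; check the current pricing page before relying on them.
const decimal InputPricePerMillion = 0.50m;
const decimal OutputPricePerMillion = 1.50m;

// Cost of one request given its token usage.
decimal Cost(int inputTokens, int outputTokens) =>
    (inputTokens * InputPricePerMillion + outputTokens * OutputPricePerMillion) / 1_000_000m;

// Hypothetical usage: the extra prompt sentence adds a few input tokens,
// while output tokens fall to 57% of the baseline.
decimal baseline = Cost(inputTokens: 200, outputTokens: 100);
decimal compact = Cost(inputTokens: 210, outputTokens: 57);

Console.WriteLine($"Baseline: ${baseline}, compact: ${compact}, ratio: {compact / baseline:P1}");
```

With these assumed numbers the total falls to about 76% of the baseline rather than 57%, because input tokens are unaffected. The larger the share of output tokens in a workload, the closer the total savings get to the output-only figure.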

I hope you spend your savings on something fun!

Appendix: can you do the same with gpt-4o?

I tested the above optimization with the gpt-3.5-turbo model. However, the same prompt didn’t work with gpt-4o: the model ignored the “Please don't use indentation in a response.” instruction and instead followed the indentation pattern of the output example in my prompt.

When I changed the output example to contain no indentation, I observed results similar to those with gpt-3.5-turbo: no indentation in the output and far fewer output tokens billed.
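If the prompt is assembled in code, one way to guarantee that the output example carries no indentation is to serialize it with System.Text.Json's default (non-indented) settings rather than pasting a hand-formatted sample. A minimal sketch; the example object and the prompt wording are hypothetical, not the author's actual prompt:

```csharp
using System;
using System.Text.Json;

// Hypothetical output example; the article does not show the real schema.
var outputExample = new { name = "apple", category = "fruit", calories = 52 };

// Default serializer options write compact JSON, so the model has no indentation to imitate.
string compactExample = JsonSerializer.Serialize(outputExample);

// Hypothetical prompt text combining the instruction with the compact example.
string prompt =
    "Classify the product described below and answer in JSON.\n" +
    "Please don't use indentation in a response.\n" +
    "Example output: " + compactExample;

Console.WriteLine(prompt);
```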
