I’ll share the results of a quick benchmark testing how the number of files in a directory impacts the following filesystem operations executed from a .NET application:

- File.Exists(fileName)

- File.WriteAllText(uniqueFileName, content)

My motivation was to decide whether keeping many cache files in a single directory is a sound design performance-wise. I’m thinking of something on the order of a million files in one directory. An alternative would be to split the files across many subdirectories, for example, by hashing the file names and assigning each file to one of many buckets based on its hash.
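The subdirectory alternative could look roughly like the sketch below. It assumes 256 buckets chosen by the first byte of a SHA-256 hash of the file name. The names `CacheBuckets` and `GetBucketedPath` are hypothetical and not part of the benchmark.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;
using System.Text;

public static class CacheBuckets
{
    // Hypothetical helper: maps a file name to one of 256 subdirectories ("00".."ff").
    // The bucket is derived from the name's hash, so the same name always maps to the same bucket.
    public static string GetBucketedPath(string rootDirectory, string fileName)
    {
        byte[] hash = SHA256.HashData(Encoding.UTF8.GetBytes(fileName));
        string bucket = hash[0].ToString("x2"); // two lowercase hex digits
        return Path.Combine(rootDirectory, bucket, fileName);
    }
}
```

Before writing a file, the bucket directory has to exist, for example via `Directory.CreateDirectory(Path.GetDirectoryName(path)!)`. That extra step is part of the complexity this design adds.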

Large directories take noticeably longer to load in File Explorer (it can take seconds). But does a large number of items impact operations that require access to only a single file in such a directory (without the need to list them all)? Let’s test.

File.Exists(fileName)

In this test, I checked how the number of items in a directory impacts the execution time of the File.Exists() operation. I tested both scenarios: the checked file exists, and the checked file does not exist. The directories had the following number of files, respectively:

- Small: 1 file

- Large: 20 001 files

- Very large: 1 000 001 files
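The post does not show how the directories were populated. One way to do it is sketched below, assuming each directory holds N filler files plus the one file that the benchmark looks up. The helper name, the filler file names, and the file content are assumptions.

```csharp
using System.IO;

public static class TestDirectorySetup
{
    // Hypothetical helper: creates `fillerCount` small files plus one known target file,
    // so the directory ends up with fillerCount + 1 files.
    public static void Populate(string directory, int fillerCount, string targetFileName = "file.webp")
    {
        Directory.CreateDirectory(directory);
        for (int i = 0; i < fillerCount; i++)
        {
            // Filler names and content are arbitrary placeholders.
            File.WriteAllText(Path.Combine(directory, $"filler_{i}.txt"), "0123456789");
        }
        File.WriteAllText(Path.Combine(directory, targetFileName), "0123456789");
    }
}
```

With this helper, `Populate(@"d:\testfiles\small", 0)`, `Populate(@"d:\testfiles\large", 20_000)`, and `Populate(@"d:\testfiles\verylarge", 1_000_000)` would produce directories with 1, 20 001, and 1 000 001 files.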

For clarity, the benchmark was a set of simple tests like:

[Benchmark]

public void FileExist_ExistingFileInAlmostEmptyDirectory_Ntfs() => File.Exists("d:\\testfiles\\small\\file.webp");

[Benchmark]

public void FileExist_ExistingFileAmong_20_000Files_Ntfs() => File.Exists("d:\\testfiles\\large\\file.webp");

[Benchmark]

public void FileExist_ExistingFileAmong_1_000_000Files_Ntfs() => File.Exists("d:\\testfiles\\verylarge\\file.webp");

// ...

Here’s the result of a test performed using BenchmarkDotNet:

BenchmarkDotNet v0.13.10, Windows 11 (10.0.22631.2861/23H2/2023Update/SunValley3)

AMD Ryzen 7 3700X, 1 CPU, 16 logical and 8 physical cores

.NET SDK 8.0.100

[Host] : .NET 8.0.0 (8.0.23.53103), X64 RyuJIT AVX2

DefaultJob : .NET 8.0.0 (8.0.23.53103), X64 RyuJIT AVX2

| Method | Mean | Error | StdDev |

|--------------------------------------------------------- |----------:|----------:|----------:|

| FileExist_ExistingFileInAlmostEmptyDirectory_Ntfs | 2.531 us | 0.0496 us | 0.0772 us |

| FileExist_ExistingFileAmong_20_000Files_Ntfs | 2.444 us | 0.0058 us | 0.0051 us |

| FileExist_ExistingFileAmong_1_000_000Files_Ntfs | 2.468 us | 0.0209 us | 0.0195 us |

| FileExist_ExistingFileInAlmostEmptyDirectory_DevDrive | 2.031 us | 0.0139 us | 0.0130 us |

| FileExist_ExistingFileAmong_20_000Files_DevDrive | 2.031 us | 0.0092 us | 0.0086 us |

| FileExist_ExistingFileAmong_1_000_000Files_DevDrive | 10.585 us | 0.0543 us | 0.0508 us |

| FileExist_NonExistingFileInAlmostEmptyDirectory_Ntfs | 2.666 us | 0.0114 us | 0.0101 us |

| FileExist_NonExistingFileAmong_20_000Files_Ntfs | 4.702 us | 0.0073 us | 0.0068 us |

| FileExist_NonExistingFileAmong_1_000_000Files_Ntfs | 7.130 us | 0.0295 us | 0.0276 us |

| FileExist_NonExistingFileInAlmostEmptyDirectory_DevDrive | 2.912 us | 0.0104 us | 0.0097 us |

| FileExist_NonExistingFileAmong_20_000Files_DevDrive | 6.001 us | 0.0328 us | 0.0307 us |

| FileExist_NonExistingFileAmong_1_000_000Files_DevDrive | 10.652 us | 0.0252 us | 0.0197 us |

Some of my conclusions here:

- The performance of the File.Exists() operation in C# is generally consistent and fast, even when dealing with directories containing many files. In a folder with 1,000,000 files, we’re still talking about microseconds.

- The performance of File.Exists() does seem to degrade as the number of files in the directory grows, but the degradation is slow and far from linear. On NTFS, the slowdown appeared only when the file did not exist (about 2.7 us in the small directory versus 7.1 us among 1,000,000 files). On the DevDrive, it also appeared for an existing file among 1,000,000 files (about 10.6 us).

- The operation typically takes longer if the file does not exist than if it exists.

- I don’t think comparing NTFS and ReFS (DevDrive) performance is reasonable here because the partitions are located on different SSD drives placed in different PCIe slots. However, I think it’s fair to note that the DevDrive, which is located on my fastest SSD in a native M.2 slot, did not consistently outperform NTFS here.

File.WriteAllText(uniqueFileName, content)

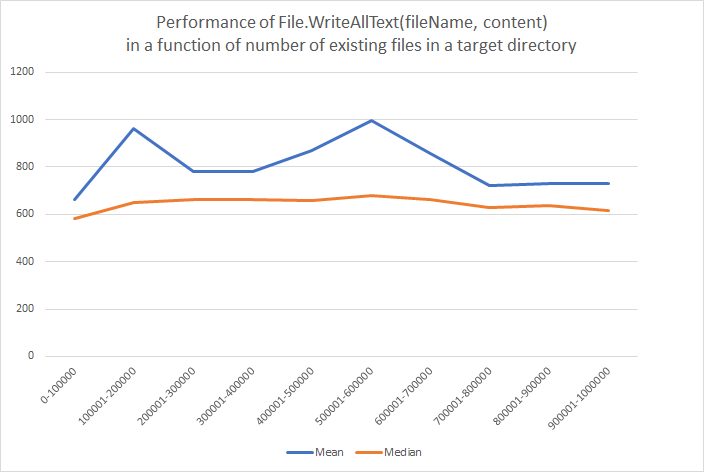

The other test consisted of creating a million small (10 bytes each) text files in a single directory and measuring whether write performance degrades as the directory fills up.

My conclusion here is that the performance of File.WriteAllText() was consistent, regardless of whether the folder where we create a new file is empty or contains 1,000,000 files already.
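The code for this test is not shown here. A minimal sketch of how such a measurement could be made is below, assuming files are written sequentially and timed in fixed-size batches with a Stopwatch. The class name, file naming, and batch size are assumptions, not the original test code.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.IO;

public static class WriteTimingSketch
{
    // Writes `totalFiles` 10-byte text files into `directory` and returns
    // the elapsed milliseconds for each full batch of `batchSize` files.
    // A trailing partial batch (if any) is written but not reported.
    public static List<double> MeasureBatches(string directory, int totalFiles, int batchSize)
    {
        Directory.CreateDirectory(directory);
        var batchTimes = new List<double>();
        var stopwatch = Stopwatch.StartNew();

        for (int i = 0; i < totalFiles; i++)
        {
            File.WriteAllText(Path.Combine(directory, $"{i}.txt"), "0123456789");

            if ((i + 1) % batchSize == 0)
            {
                batchTimes.Add(stopwatch.Elapsed.TotalMilliseconds);
                stopwatch.Restart();
            }
        }

        return batchTimes;
    }
}
```

If the per-batch times stay roughly flat from the first batch to the last, as in a call like `MeasureBatches(path, 1_000_000, 10_000)`, writes are not slowing down as the directory grows.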

Test setup

The tests were performed using:

- A .NET 8 console application built in Release mode

- Windows 11

- 32 GB RAM

- Ryzen 3700X CPU

- Two partitions:

  - One formatted as NTFS on a Samsung SSD 850 Evo 500GB

  - Another set up as a DevDrive, using the ReFS file system, on a Samsung SSD 980 Pro 1TB

Of course, many factors can impact the performance of disk operations, like SSD caches, OS caches, or antivirus software activity, so results may vary in different configurations.

Summary

I found no evidence to support a worry that single-file operations in a folder containing many files are significantly slower than in folders containing fewer files (at least in the tested environment).

There is a small performance degradation with File.Exists() as the number of files grows. It’s small and would only be relevant if we checked for file existence very frequently. For most of us, it seems negligible and not worth complicating the design to work around such an issue.