What is OpenAI Batch API?

The pricing page for OpenAI services has an annotation that drew my attention recently:

*Batch API pricing requires requests to be submitted as a batch. Responses will be returned within 24 hours for a 50% discount.

I pretended not to see this option for a while because using batch jobs generally complicates the code 🙂 But 50% is a significant discount. I mostly use OpenAI GPT models for batch operations that are not time-sensitive, so it looks useful. I also wanted to see how the API behaves in the real world. Here, I’ll share what I’ve learned with you.

Learning #1: Batch API doesn’t seem to be a first-class citizen in .NET SDKs (at the moment)

I’m typically using one of two .NET libraries to access OpenAI APIs from my C# applications:

- OpenAI (tested at 2.0.0-beta.8) is the official library. I use the pre-release version because the stable one is dated a few months back.

- Betalgo OpenAI is a beautifully maintained community library that keeps up with API changes and actively resolves reported issues.

I attempted to run a batch job using the official library, OpenAI. It has a class named OpenAI.Batch.BatchClient for this purpose. Here’s the code I ended up with:

    using System.ClientModel;                 // ApiKeyCredential, BinaryContent
    using Microsoft.Extensions.Configuration; // ConfigurationBuilder, AddUserSecrets
    using OpenAI.Batch;                       // BatchClient

    static async Task Main(string[] args)
    {
        // Read API Key from User Secrets (Visual Studio: right-click on project -> Manage User Secrets)
        var configuration = new ConfigurationBuilder().AddUserSecrets<Program>().Build();
        var apiKey = configuration["OpenAiDeveloperKey"] ?? throw new ArgumentException("OpenAI API key is missing in User Secrets configuration");

        // Create a client for the OpenAI Batch API
        BatchClient batchClient = new(new ApiKeyCredential(apiKey));

        // Create a batch request
        // `OpenAI` library doesn't deliver a strongly-typed model (yet?),
        // so property names come directly from docs at https://platform.openai.com/docs/api-reference/batch/create
        var batchRequest = new
        {
            // file with requests was uploaded manually at https://platform.openai.com/storage/files
            // example of file content: https://platform.openai.com/docs/guides/batch/1-preparing-your-batch-file
            input_file_id = "file-f8fB0yH04nq9QmiWYYBtyYKC",
            endpoint = "/v1/chat/completions",
            completion_window = "24h", // currently only 24h is supported
            metadata = new
            {
                // available later via Batch API, although not exposed in the UI, so usefulness is questionable
                description = "C# eval job test"
            }
        };

        var response = await batchClient.CreateBatchAsync(BinaryContent.Create(BinaryData.FromObjectAsJson(batchRequest)));
        var rawResponse = response.GetRawResponse();
        Console.WriteLine(rawResponse.Content);

        // this only writes METADATA about the batch job to the output; the job might take up to 24h to complete
        // Metadata is something like:
        //{
        //  "id": "batch_lF3mjwKIDUusSrf43nCnkqOz",
        //  "object": "batch",
        //  "endpoint": "/v1/chat/completions",
        //  "input_file_id": "file-f8fB0yH04nq9QmiWYYBtyYKC",
        //  "status": "validating",
        //  "created_at": 1722955749,
        //  "expires_at": 1723042149,
        //  (...)
        //  "metadata": {
        //    "description": "C# eval job test"
        //  }
        //}
    }

So currently, the BatchClient class has limited functionality. The library handles authentication but not much more. It lacks a strongly typed model to help define batch jobs. It’s still a pre-release, so it’s hard to say whether it will stay that way by design or whether the developers will extend the functionality a bit.

Using it is arguably better than working with HttpClient directly. But I think there’s still an opportunity to build a good abstraction over the Files API (the batch job has to be uploaded as a file) and the Batch API to make the code less verbose and more fun.
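
One small building block for such an abstraction could be a helper that produces the JSONL content of the batch input file from plain prompts. The following is a minimal sketch that uses only System.Text.Json; `BuildBatchLine` and `BuildBatchFile` are hypothetical names made up for this illustration and are not part of either library.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hypothetical helper: serializes one chat completion request as a single
// JSONL line with the fields the batch input file uses
// (custom_id, method, url, body).
static string BuildBatchLine(string customId, string model, string systemPrompt, string userPrompt, int maxTokens = 200)
{
    var line = new
    {
        custom_id = customId,
        method = "POST",                // currently the only supported method
        url = "/v1/chat/completions",
        body = new
        {
            model,
            messages = new[]
            {
                new { role = "system", content = systemPrompt },
                new { role = "user", content = userPrompt }
            },
            max_tokens = maxTokens
        }
    };
    return JsonSerializer.Serialize(line);
}

// Hypothetical helper: one line per user prompt, all sharing the same model.
static string BuildBatchFile(string model, string systemPrompt, IEnumerable<string> userPrompts) =>
    string.Join("\n", userPrompts.Select((prompt, i) =>
        BuildBatchLine($"request{i + 1}", model, systemPrompt, prompt)));

var jsonl = BuildBatchFile("gpt-4o-mini", "You are a helpful assistant.",
    new[] { "Hello world!", "Goodbye world!" });
Console.WriteLine(jsonl);
```

The resulting text can be saved as a .jsonl file and uploaded through the Files API or the web UI before the batch is created.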

The alternative library, Betalgo OpenAI, includes a .NET type for Batch requests but is also a thin wrapper. It accepts strings (where it could accept enums) and doesn’t seem to make any higher-level abstraction over Files and Batch. I couldn’t find any examples of Batch usage in the docs, so I might not have a full picture. However, I scanned the code on GitHub and found nothing related.

So, to summarize, you can use Batch API in C# today. It works, but it’s not very different from assembling the HTTP request yourself.

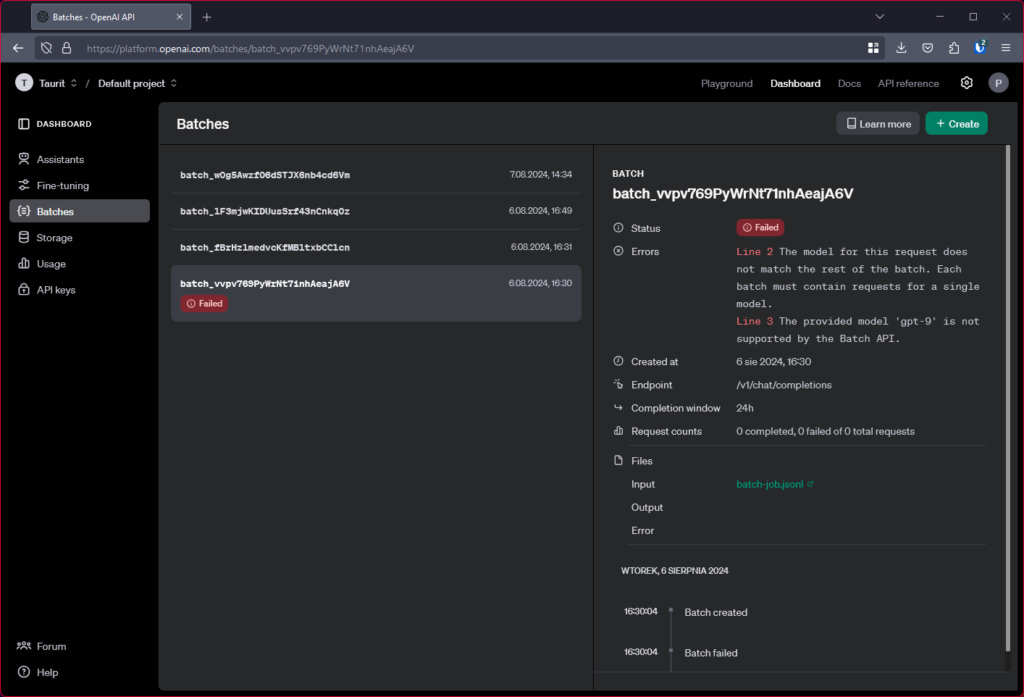

Learning #2: OpenAI has a good web UI for Batch jobs and Storage

On the bright side, I discovered a neat web UI for batch jobs and storage. You can upload the job file within your browser, monitor its status, and download the result without coding.

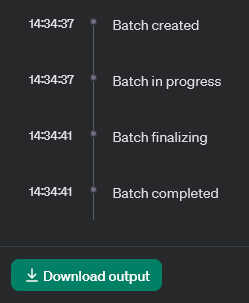

Learning #3: results are often available pretty fast!

The most interesting observation is probably how fast the results are typically available. The deal is we get a 50% discount, but the results are only guaranteed to be there within a 24-hour window.

I only ran a few tests so far, but I was happy that the results were available within seconds!

This doesn’t prove much. I might have been lucky to use the service outside the peak demand time. It absolutely doesn’t mean that I recommend using it in interactive applications. But it gives hope that if we use batch jobs to process some data we need later, we won’t have to pause work for 24 hours but can typically continue later that day.
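
To measure how long a batch really takes without refreshing the web UI, one option is to poll the batch status until it stops changing. The sketch below calls the REST endpoint `GET /v1/batches/{batch_id}` with a plain HttpClient rather than guessing at library method signatures. The helper names, the environment variable names and the 30-second interval are assumptions made for this illustration.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text.Json;
using System.Threading.Tasks;

// Statuses after which a batch no longer changes.
static bool IsTerminal(string? status) =>
    status is "completed" or "failed" or "expired" or "cancelled";

// Polls GET /v1/batches/{id} until the batch reaches a terminal status
// and returns the final batch object as JSON.
static async Task<JsonElement> WaitForBatchAsync(HttpClient http, string batchId, TimeSpan pollInterval)
{
    while (true)
    {
        using var response = await http.GetAsync($"https://api.openai.com/v1/batches/{batchId}");
        response.EnsureSuccessStatusCode();

        using var doc = JsonDocument.Parse(await response.Content.ReadAsStringAsync());
        var status = doc.RootElement.GetProperty("status").GetString();
        Console.WriteLine($"{DateTime.Now:T} status: {status}");

        // Clone so the element stays valid after the JsonDocument is disposed.
        if (IsTerminal(status)) return doc.RootElement.Clone();
        await Task.Delay(pollInterval);
    }
}

// Hypothetical environment variables; nothing is sent unless both are set.
var apiKey = Environment.GetEnvironmentVariable("OPENAI_API_KEY");
var batchId = Environment.GetEnvironmentVariable("OPENAI_BATCH_ID");
if (apiKey is not null && batchId is not null)
{
    using var http = new HttpClient();
    http.DefaultRequestHeaders.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
    var batch = await WaitForBatchAsync(http, batchId, TimeSpan.FromSeconds(30));
    Console.WriteLine($"Final status: {batch.GetProperty("status").GetString()}");
}
```

When the final status is completed, the batch object carries an `output_file_id` that can be downloaded through the Files API or from the Storage page.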

Learning #4: there are some quirks

I found some unexpected behaviors, too. Just for context, this is an example input file for the batch job, defining two requests:

{"custom_id": "request1", "method": "POST", "url": "/v1/chat/completions", "body": {"model": "gpt-4o", "messages": [{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": "Hello world!"}],"max_tokens": 200}}

{"custom_id": "request2", "method": "POST", "url": "/v1/chat/completions", "body": {"model": "gpt-4o-mini", "messages": [{"role": "system", "content": "You are an unhelpful assistant."},{"role": "user", "content": "Hello world!"}],"max_tokens": 200}}Code language: JSON / JSON with Comments (json)All requests in batch must share the same model

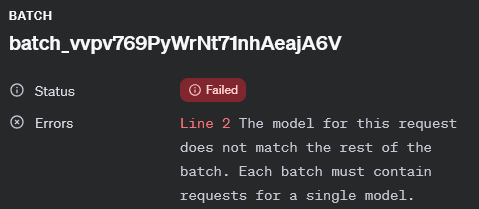

Even though the batch input file requires specifying the model for each task (in my example, it’s "model": "gpt-4o-mini"), it will fail if the model is not the same for all tasks in a batch:

This is probably meant to future-proof the contract, but it is confusing at the moment (why allow inconsistent input in the first place?).
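
Given the behavior described above, it may be worth checking the input file locally before uploading it. The following is a minimal sketch of such a pre-flight check; `DistinctModels` is a hypothetical helper, and the two sample lines are shortened versions of the example input.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hypothetical helper: returns the distinct model names found in the
// lines of a batch input file (JSONL), skipping blank lines.
static IReadOnlyList<string> DistinctModels(IEnumerable<string> jsonlLines) =>
    jsonlLines
        .Where(line => !string.IsNullOrWhiteSpace(line))
        .Select(line =>
        {
            using var doc = JsonDocument.Parse(line);
            return doc.RootElement.GetProperty("body").GetProperty("model").GetString() ?? "";
        })
        .Distinct()
        .ToList();

// Shortened sample lines; a real file could be read with File.ReadLines(path).
var sample = new[]
{
    @"{""custom_id"": ""request1"", ""body"": {""model"": ""gpt-4o""}}",
    @"{""custom_id"": ""request2"", ""body"": {""model"": ""gpt-4o-mini""}}"
};

var models = DistinctModels(sample);
if (models.Count > 1)
    Console.WriteLine($"Mixed models in one batch: {string.Join(", ", models)}");
else
    Console.WriteLine("All requests use the same model.");
```

A check like this only catches the mixed-model case observed here; the service may reject a file for other reasons as well.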

Some input parameters are redundant

There are some parameters in the Batch creation model and request input object which can only take one value, for example:

    // in batch API input model:
    {
      "completion_window": "24h", // this is the only allowed value
      ...
    }

    // in batch definition file
    {
      "method": "POST", // Currently only POST is supported
      ...
    }

It also looks like an attempt to prepare APIs for future features, although such parameters seem unnecessary from the API design perspective today.

Summary

Integrating a .NET application with OpenAI Batch API requires some time to build a higher-level abstraction over the Files and Batch APIs, but it’s not rocket science. Existing libraries help, although there is still potential to go further.

The discount is huge, so if you process a significant amount of data and don’t need an immediate response (for an interactive experience), this is worth the attention!
