Generative AI endpoints might return Base64-encoded images

Recently, I explored generating images with locally hosted Stable Diffusion models. Thanks to the AUTOMATIC1111 stable-diffusion-webui project, I got the local API working pretty quickly.

However, one catch with such APIs is that images come Base64-encoded in a JSON property, so you can’t easily preview what was generated. That gets in the way of quick experimentation.

```json
// example of a response from Stable Diffusion txt2img API
// (POST http://localhost:7860/sdapi/v1/txt2img)
{
  "images": ["VERY-LONG-BASE64-BLOB-WITH-IMAGE..."],
  "parameters": {
    "prompt": "masterpiece,best quality,<lora:tbh323-sdxl:0.6>,cat in fisheye,flowers,<lora:tbh123-sdxl:0.2>,paint by Vincent van Gogh,",
    "negative_prompt": "lowres,bad anatomy,bad hands,text,error,missing fingers,extra digit,fewer digits,cropped,worst quality,low quality,normal quality,jpeg artifacts,signature,watermark,username,blurry,nsfw,",
    "batch_size": 3,
    "sampler_name": "DPM++ 2M",
    // ...
  }
}
```

One solution is to write a helper application to display images and work around this issue. Another is to keep using the tools we normally use to test HTTP APIs (for me, it’s Insomnia) and extend their functionality with a plugin 😉
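For the first option, the helper does not have to be big. Here is a minimal sketch in Python, assuming the response has the shape shown above (an `images` array of plain Base64 strings) and that the images are PNG files. The function name `save_images`, the `out` directory, and the file naming are my own choices for illustration, not part of any API.

```python
import base64
from pathlib import Path


def save_images(response: dict, out_dir: str = "out") -> list[Path]:
    """Decode every Base64 string in response["images"] and write it to disk.

    Assumes the txt2img-style response shown above; the .png extension
    is an assumption about the image format.
    """
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)

    paths = []
    for index, blob in enumerate(response.get("images", [])):
        path = target / f"image-{index}.png"
        path.write_bytes(base64.b64decode(blob))  # Base64 text -> raw image bytes
        paths.append(path)
    return paths
```

Pass it the parsed JSON body of the response and open the resulting files in any image viewer. It works, but it is one more step after every request, which is why the second option is more comfortable for experimenting.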

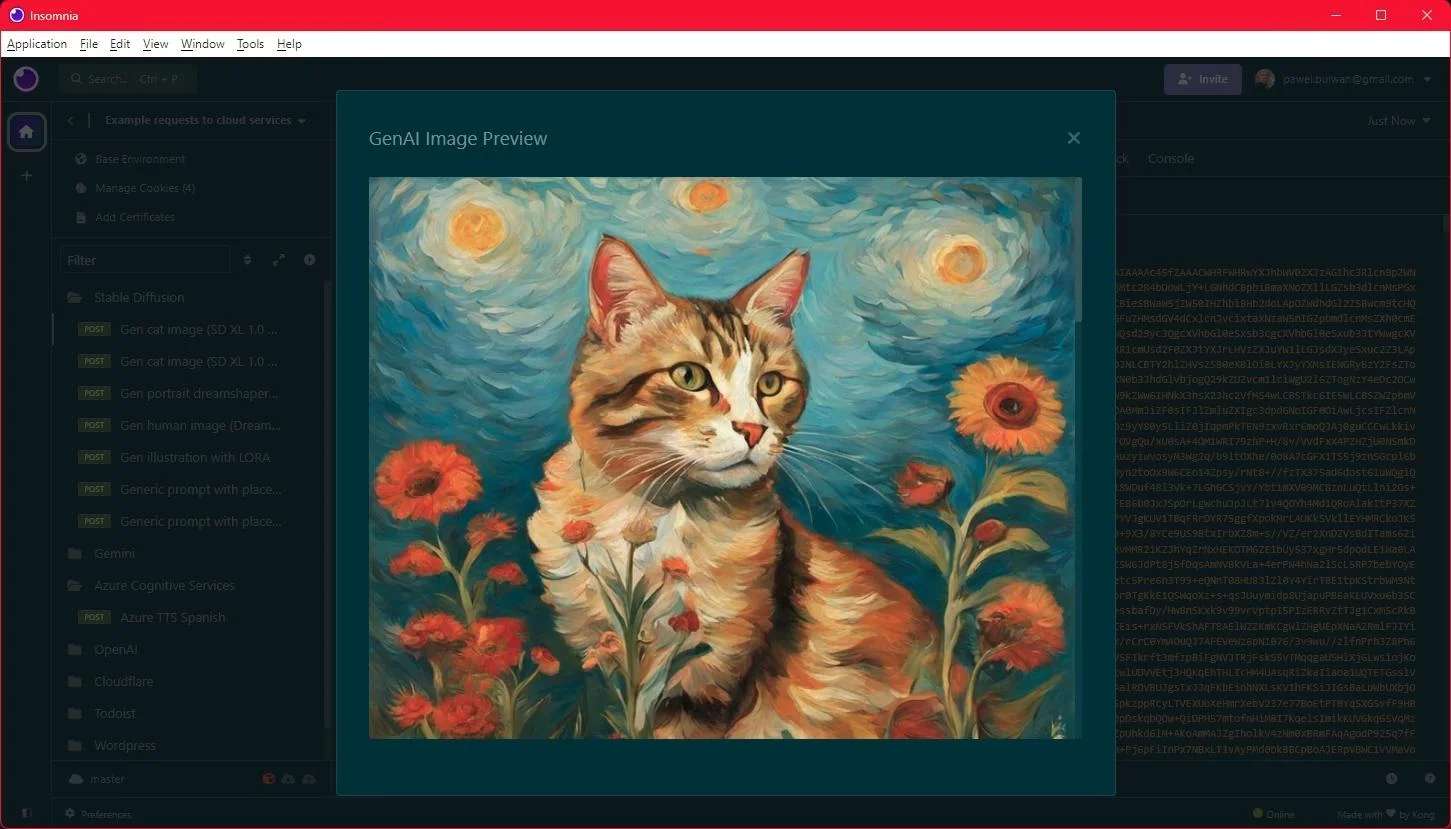

Previewing generated images in Insomnia

I ended up writing a short plugin to display a preview of generated images when we receive the response from a supported endpoint. It’s called insomnia-plugin-genai-image-preview. It is now available in Insomnia’s Plugin Hub.

If Google brought you here and this is what you were looking for, take it for a spin! It’s really just a few lines of code, and the support is limited to Stable Diffusion Web UI and DALL-E APIs. But if there is demand for it, it might support more technologies soon 🙂
