TL/DR: JSON mode remains available as an API feature, but the newest models include the Structured Outputs feature, which solves this problem 100% without workarounds needed.

JSON Mode vs Structured Output

When we integrate our applications with chatbot-like API, we often prefer to work with data in a structured format like JSON rather than natural language.

When I first drafted this article, JSON Mode was the only option for enforcing guardrails on OpenAI’s Chat Completion API output format. Since last week, a new and more robust tool has been available for this purpose: Structured Output. So let’s clarify the difference:

- JSON mode is a simple flag to tell the API you expect the response to be in JSON format. It improves model reliability for generating valid JSON outputs but does not guarantee that the model’s response will conform to a particular schema.

- Structured Outputs is a newer feature designed to ensure model-generated outputs will exactly match the JSON Schema provided by the developer.

In this article, I’ll show some behaviors of JSON mode, but please be aware that there might be a better option than was available at the time of writing.

Exploring JSON mode and its pitfalls

Why simply asking for JSON output in the prompt was not enough

As a starting point, let me show how I used to solve the issue before Chat Completion API had any parameters allowing it to steer it toward generating JSON output. Here is an example prompt:

You are a teacher of English language. Explain words from a given list.

Provide response in a JSON format.

Output should be an array of objects. Each object in array should contain properties: EnglishWord, EtymologyExplanation.

Example of the correct output:

```json

[

{ "EnglishWord": "hi", "EtymologyExplanation": "Hi developed from the Middle English \"hy\", similar to \"hey\" and \"ha\". " }

]

```

The input to process is:

- cat

- mouse

Code language: PHP (php)And this worked okay most of the time. However, the output format wasn’t stable, and the response format could deviate from a correct JSON in a few ways. Let me combine a few of the mistakes I encountered into a single example:

Certainly! I will explain the etymology of the words "cat," and "mouse" and provide the response in the specified JSON format.

```json

[

{

"EnglishWord": "cat",

"EtymologyExplanation": "The word "cat" has a rich history and comes from the Old English word 'catt,' which was influenced by the Late Latin term 'cattus.' It's thought that 'cattus' was introduced to Old English through early Christian writings. The domestication of cats dates back to ancient times, with the word 'cat' remaining relatively consistent in its form and meaning throughout its history."

},

{

"EnglishWord": "mouse",

"EtymologyExplanation": "The word 'mouse' has its origins in Middle English 'mous,' which is derived from Old English 'mūs.' This word can be traced back further to Proto-Germanic 'mūs,' and ultimately to the Proto-Indo-European root '*(s)meus,' meaning 'mouse' or 'small rodent.' 'Mouse' has been used to describe these small mammals for centuries, and the word has undergone minimal changes in its spelling and pronunciation over time."

}

]

```

These explanations provide a brief overview of the etymology and historical development of the words "cat," and "mouse" in the English language.

Code language: JSON / JSON with Comments (json)The problems with the above output are:

- The actual JSON is preceded and followed by unstructured text.

- The actual JSON is wrapped in a

```jsonblock (here, you could argue I asked for it, but it might happen regardless of it) - The JSON contains unescaped quote marks around the word “cat”, which makes the syntax invalid – this happens even with the recent gpt4-turbo model

We can solve the first two issues on the application side with heuristic preprocessing (even though we’ll still pay for those useless parts of the output). But the third problem – invalid syntax – isn’t easily solvable. While it might occur only sporadically, keeping apps reliable with such variance in the output is a challenge.

JSON Mode for the rescue

JSON mode made a promise to solve all those issues:

When JSON mode is enabled, the model is constrained to only generate strings that parse into valid JSON object.

Source: Open AI API – Text Generation – JSON mode

(…) JSON mode will not guarantee the output matches any specific schema, only that it is valid and parses without errors.

We still need to describe schema in a natural language, but then we get guarantees about the output adherence to JSON specification. Let’s enable it, then! It’s as easy as declaring a single option in the request:

POST https://api.openai.com/v1/chat/completions

{

"model": "gpt-3.5-turbo-1106",

"response_format": {

"type": "json_object"

},

"messages": [

{

"role": "system",

"content": "You are a teacher of English language."

},

{

"role": "user",

"content": "..."

}

]

}

Code language: JSON / JSON with Comments (json)So, what output would it generate for my previous example? Let’s see:

{

"words":[

{

"EnglishWord":"cat",

"EtymologyExplanation":"The word 'cat' comes from the Old English 'catt', which is of unknown origin. It is believed to have come from other European languages such as Latin 'cattus' and German 'katze'."

},

{

"EnglishWord":"mouse",

"EtymologyExplanation":"The word 'mouse' originated from the Old English word 'mus', and is related to similar words in other Germanic languages such as German 'maus' and Dutch 'muis'."

}

]

}

Code language: JSON / JSON with Comments (json)That looks better, but… this is not the schema I requested! I asked for an array in response. I even gave it an example, but it responded with an object with my expected array placed in some made-up “words” property.

Learning #1: in JSON mode, we still have no guarantees of schema adherence

The first observation is that the schema might differ even if we provide an example of the expected output in the prompt.

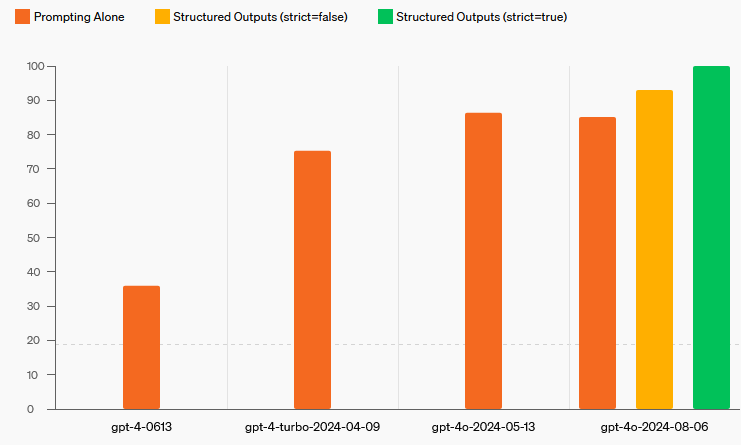

OpenAI measures it. For the recent models, prompting alone results in correct schema only in about 85% of the responses, and enabling JSON mode improves it to about 93%. But to guarantee schema adherence, we need to use Structured Outputs.

Learning #2: asking for an array as a root element is a particularly bad idea

The fact that the API cannot return an array seems to be a common observation on forums. So, to make the API more reliable, it’s better to use an object rather than an array:

// array as root element causes adherence problems

[

{"element1": "value1"},

{"element2": "value2"}

]

// having an object at the root can improve reliability, based on observations

{

elements: [

{"element1": "value1"},

{"element2": "value2"}

]

}

Code language: JSON / JSON with Comments (json)Summary

JSON mode is a useful improvement over describing JSON output in a prompt. It increases the chance that output will conform to the provided JSON schema. Enabling it is really simple. I found it’s often reliable enough to call the Completion API thousands of times and receive a response in a proper JSON format, matching the output example I provided.

It’s still important to remember that schema adherence is not guaranteed. For the guarantee, we now have the “strict” Structured Outputs feature.

No comments yet, you can leave the first one!