In Docker, it is common to build your containers upon a base image from a third party. For example, a Dockerfile for a .NET project usually starts with code similar to:

FROM mcr.microsoft.com/dotnet/aspnet:7.0 AS base

WORKDIR /app

EXPOSE 80

#... more steps to build the project etc.

Now, for a long time, I assumed that when I build an image from such a Dockerfile, it will use the most recent available versions of base images like mcr.microsoft.com/dotnet/aspnet:7.0.

I typically built images like this:

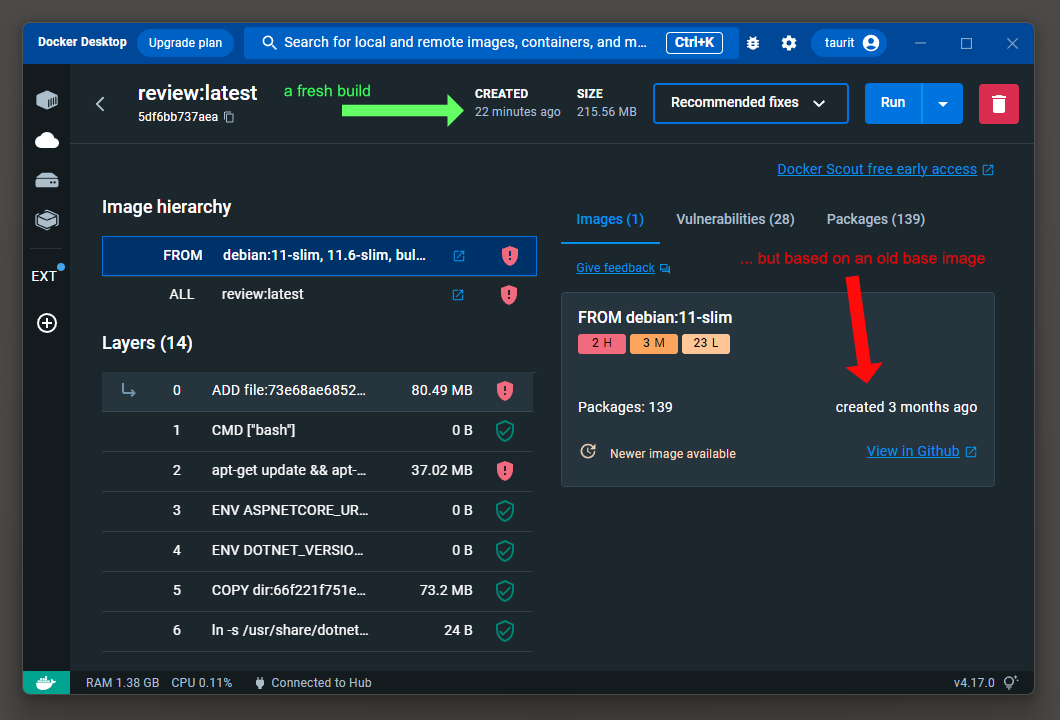

docker build -t myimagename:latest .

But then I noticed that a newly built image was based on a three-month-old, cached version of the base image!

We would rather not run our software on an outdated system, right? Researchers constantly find vulnerabilities in base images. Whenever the maintainers patch one, they rebuild the image and upload a new version to the server.

On the other hand, Docker’s behavior seems reasonable. The version tag in the base image name, aspnet:7.0, did not change. Docker has no reason to think that the cached version is any different from the identically named version on the server.
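To check how stale a cached base image really is, one option is to read its creation timestamp from the local image store. The sketch below is only an illustration in Python (chosen because it runs the same on Windows, Linux, and macOS). The function names are hypothetical, and it assumes the timestamp printed by `docker image inspect` is in UTC and starts with a `YYYY-MM-DDTHH:MM:SS` prefix.

```python
import subprocess
from datetime import datetime, timezone


def parse_created(stamp: str) -> datetime:
    """Parse the timestamp printed by `docker image inspect`.

    Assumption: the value looks like 2023-05-10T12:34:56.123456789Z (UTC).
    Only the first 19 characters (down to whole seconds) are used.
    """
    return datetime.strptime(stamp.strip()[:19], "%Y-%m-%dT%H:%M:%S").replace(
        tzinfo=timezone.utc
    )


def age_in_days(created: datetime, now: datetime) -> int:
    """Whole days elapsed between the image creation time and `now`."""
    return (now - created).days


def cached_image_age(image: str) -> int:
    """Ask the local Docker engine when `image` was created (no pull happens)."""
    result = subprocess.run(
        ["docker", "image", "inspect", "--format", "{{.Created}}", image],
        capture_output=True,
        text=True,
        check=True,
    )
    return age_in_days(parse_created(result.stdout), datetime.now(timezone.utc))
```

Calling `cached_image_age("mcr.microsoft.com/dotnet/aspnet:7.0")` returns the age of the locally cached copy in days. A large number suggests the base image is worth refreshing before the next build.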

So, how do we tell Docker that we want it to use the most recent base image?

Update outdated Docker images on Linux and macOS

Goffity Corleone published a solution to update all docker images already pulled on Linux and macOS. If you use those systems, you can find an answer there!

Update outdated Docker images on Windows

On Windows, you don’t normally have tools like grep, awk, and xargs. I thought of writing an equivalent script in PowerShell that updates all images. Parsing the default output of docker images would be ugly, because that table is formatted for humans, not machines. The --format option (for example, docker images --format "{{.Repository}}:{{.Tag}}") produces output that is easier to process, but I don’t have a PowerShell script built on it ready to copy and paste, sorry.
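For readers who want to try the "update everything" approach anyway, `docker images` accepts a `--format` option that takes a Go template, so the human-oriented table does not have to be parsed. Below is a minimal sketch in Python rather than PowerShell, so it does not depend on grep, awk, or xargs. The function names are hypothetical, and it assumes Python 3.9 or later and the `docker` CLI on the PATH.

```python
import subprocess


def tagged_refs(listing: str) -> list[str]:
    """Turn `docker images --format "{{.Repository}}:{{.Tag}}"` output into pullable names.

    Untagged (dangling) images are listed with <none> and cannot be pulled,
    so they are skipped. Duplicates are dropped while keeping the original order.
    """
    refs = []
    for line in listing.splitlines():
        ref = line.strip()
        if ref and "<none>" not in ref and ref not in refs:
            refs.append(ref)
    return refs


def update_all_images() -> None:
    """Pull every tagged image in the local store, one at a time."""
    listing = subprocess.run(
        ["docker", "images", "--format", "{{.Repository}}:{{.Tag}}"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    for ref in tagged_refs(listing):
        # check=False: locally built images have no registry copy,
        # so a failed pull for those is expected and should not stop the loop.
        subprocess.run(["docker", "pull", ref], check=False)
```

Calling `update_all_images()` pulls each tagged image in turn. Images you built yourself (such as myimagename:latest) will simply report a pull error and be skipped.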

But I found two alternatives that achieve this goal, so I’ll share them:

Method 1: always use docker build --pull

I found that there is a --pull argument we can add to our build command:

docker build --pull -t mycontainername:latest .

I noticed that when we add this argument:

- Docker CLI downloads (pulls) and uses the most recent base images specified in a Dockerfile during the build. Just as we want!
- Docker CLI does not update the stored images, though. We still store an outdated image. Future builds without this flag will use the old base image.

A side note: if the Dockerfile contains any custom steps that download software or project dependencies, we might want to additionally use --no-cache to keep them current as well (at least for production builds).
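One way to avoid forgetting these flags is to wrap the build in a small helper. The sketch below is only an illustration: `build_command` and `build` are hypothetical names, and the `production` switch is an assumption about how you might separate everyday builds from release builds. It always passes `--pull` and adds `--no-cache` only on request.

```python
import subprocess


def build_command(tag: str, context: str = ".", production: bool = False) -> list[str]:
    """Assemble the `docker build` arguments.

    --pull is always included so the base image is refreshed.
    --no-cache is added only for production builds, since it re-runs every step.
    """
    command = ["docker", "build", "--pull"]
    if production:
        command.append("--no-cache")
    return command + ["-t", tag, context]


def build(tag: str, production: bool = False) -> None:
    """Run the build in the current directory and raise if it fails."""
    subprocess.run(build_command(tag, production=production), check=True)
```

With this helper, `build("myimagename:latest")` runs a normal build with a fresh base image, and `build("myimagename:latest", production=True)` also bypasses the layer cache.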

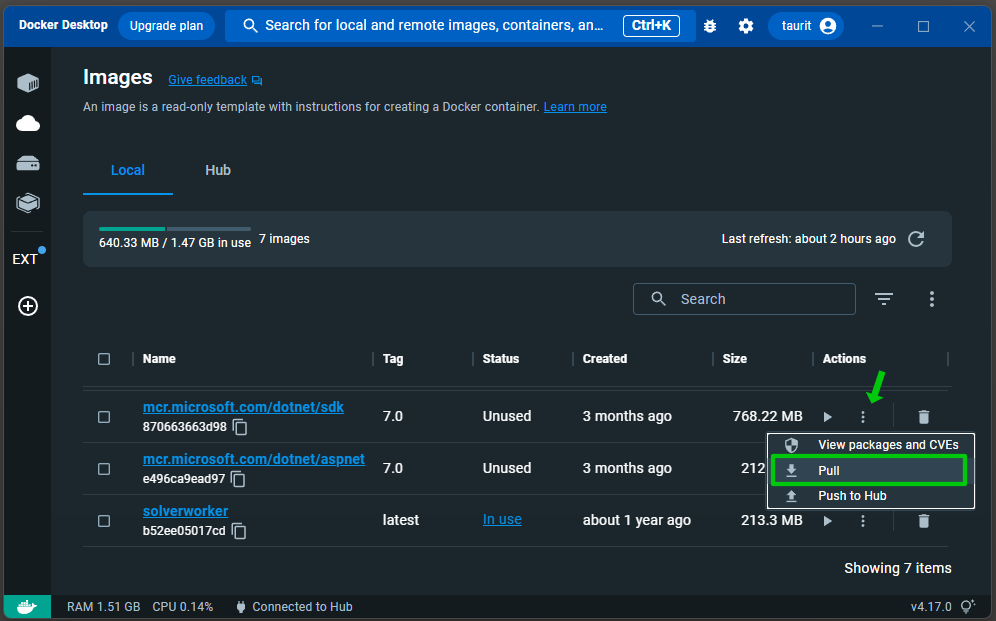

Method 2: update images one by one

The docker CLI doesn’t seem to have any command to update all obsolete images, so the easiest approach is to update them one by one. You can do it from the command line (docker image pull imagename:tag). But perhaps the Docker Desktop GUI lets you do that even quicker:

Pulling the most recent image will still keep the old image in the list as a dangling image, so you might also want to delete it. You probably won’t ever need it again, and I would normally clean up such unused images.
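The pull and the cleanup can be combined into one step. This is a rough sketch with hypothetical function names. Note that `docker image prune` removes every dangling image in the local store, not only the one the pull just replaced, so treat it as an assumption that this is acceptable on your machine.

```python
import subprocess


def refresh_commands(image: str) -> list[list[str]]:
    """Commands to pull a fresh copy of `image` and then drop dangling images.

    `docker image prune` removes ALL dangling images;
    --force skips the interactive confirmation prompt.
    """
    return [
        ["docker", "image", "pull", image],
        ["docker", "image", "prune", "--force"],
    ]


def refresh(image: str) -> None:
    """Run the pull and the cleanup in order, stopping at the first failure."""
    for command in refresh_commands(image):
        subprocess.run(command, check=True)
```

For example, `refresh("mcr.microsoft.com/dotnet/aspnet:7.0")` updates the base image from the first example and then removes the leftover dangling copies.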

If you have any thoughts on this topic, please share them in the comments!
